I recently had the challenge of demonstrating an AWS EC2 AutoScaling Group.

To set up this demo, I clearly needed to build an AutoScaling Group, Launch Template, Target Group and Application Load Balancer.

However, to get EC2 instances with the necessary software (such as Apache Webserver, and some specific web server pages), I needed to identify a suitable AMI.

For demonstration purposes, I therefore needed to build a web server using the relevant User Data, and snapshot this to build a custom AMI which could be referenced by the Launch Template.

Since I use AWS CloudFormation (wherever possible) to build all my demos, this meant that I needed to define a CloudFormation Custom Resource to create the AMI.

This blog explains how I did this. I assume you are basically familiar with CloudFormation templates, so I have not included all the details of each step; instead I have highlighted the main tasks and key code snippets.

The final demo files are available in GitHub here:

https://github.com/dendad-trainer/simple-aws-demos/tree/main/custom-ami-autoscaling

Defining the WebServer

The first task is to define the web server which will be used as the basis for building our AMI.

For cost reasons, I decided to build this using AWS Graviton, using ARM architecture. The Parameter section of the CloudFormation Template looks like this:

    Parameters:
      AMZN2023LinuxAMIId:
        Type: AWS::SSM::Parameter::Value<AWS::EC2::Image::Id>
        Default: /aws/service/ami-amazon-linux-latest/al2023-ami-kernel-default-arm64

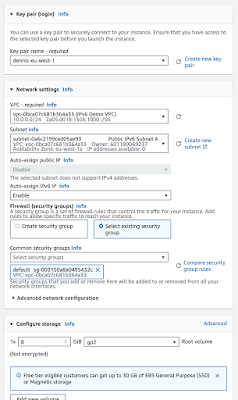
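
To see what this parameter type resolves to, the same lookup can be sketched in Python. This is only an illustration and not part of the template: `latest_al2023_arm64_ami` is a hypothetical helper name, and it assumes you pass in a boto3 SSM client (for example `boto3.client("ssm")`) that is allowed to read public parameters.

```python
# Public SSM parameter used as the default in the template above.
AL2023_ARM64_PARAM = (
    "/aws/service/ami-amazon-linux-latest/al2023-ami-kernel-default-arm64"
)


def latest_al2023_arm64_ami(ssm_client, name=AL2023_ARM64_PARAM):
    """Return the AMI ID currently stored in the public SSM parameter.

    ssm_client is assumed to be a boto3 SSM client, passed in by the caller
    so that this helper has no hard dependency on AWS credentials.
    """
    response = ssm_client.get_parameter(Name=name)
    return response["Parameter"]["Value"]
```

CloudFormation performs this resolution itself at stack creation time, which is why the template can simply use `!Ref AMZN2023LinuxAMIId` as the ImageId.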

Having defined a VPC with Public and Private subnets, I could then define the EC2 instance. The key part of this Resource Definition is as follows:

    Resources:
      WebInstance:
        Type: 'AWS::EC2::Instance'
        Properties:
          ImageId: !Ref AMZN2023LinuxAMIId
          InstanceType: t4g.micro
          .....
          UserData:
            Fn::Base64: !Sub |
              #!/bin/bash -ex
              # Use latest Amazon Linux 2023
              dnf update -y
              dnf install -y httpd php-fpm php php-devel
              /usr/bin/systemctl enable httpd
              /usr/bin/systemctl start httpd
              cd /var/www/html
              cat <<EOF > index.php
              <?php
              ?>
              <!DOCTYPE html>
              <html>
              <head>
              <title>Amazon AWS Demo Website</title>
              </head>
              <body>
              <h2>Amazon AWS Demo Website</h2>
              <table border=1>
              <tr><th>Meta-Data</th><th>Value</th></tr>
              <?php
              # Get the instance ID
              echo "<tr><td>InstanceId</td><td><i>";
              echo shell_exec('ec2-metadata --instance-id');
              echo "</i></td></tr>";
              # Instance Type
              echo "<tr><td>Instance Type</td><td><i>";
              echo shell_exec('ec2-metadata --instance-type');
              echo "</i></td></tr>";
              # AMI ID
              echo "<tr><td>AMI</td><td><i>";
              echo shell_exec('ec2-metadata --ami-id');
              echo "</i></td></tr>";
              # User Data
              echo "<tr><td>User Data</td><td><i>";
              echo shell_exec('ec2-metadata --user-data');
              echo "</i></td></tr>";
              # Availability Zone
              echo "<tr><td>Availability Zone</td><td><i>";
              echo shell_exec('ec2-metadata --availability-zone');
              echo "</i></td></tr>";
              ?>
              </table>
              </body>
              </html>
              EOF
              # Sleep to ensure that the file system is synced before the snapshot is taken
              sleep 120
              # Signal to say its OK to create an AMI from this Instance.
              /opt/aws/bin/cfn-signal -e $? --stack ${AWS::StackName} \
                --region ${AWS::Region} --resource AMICreate

This builds the web server that will be used as the basis of the AMI for the AutoScaling Group.

I placed this web server into a Public Subnet, and protected it with a Security Group which allows port 80. I also added port 22 to the Security Group Rules, so that I was able to connect to this instance using EC2 Instance Connect.

Synchronising AMI creation

Experience showed that there is a risk that the Snapshot for the AMI could be created before the EC2 filesystem has been fully 'sync'd to disk. To address this risk, I could either allow EC2 to reboot the instance when creating the image (that is, not setting NoReboot), or put a suitable 'sleep' command in the Instance UserData to give the Volume time to sync. I chose the 'sleep' approach.

I defined a Wait Condition for the cfn-signal command to trigger, so that the AMI creation only starts after the UserData has completed. The following part of the Resources section captures this:

    AMICreate:
      Type: AWS::CloudFormation::WaitCondition
      CreationPolicy:
        ResourceSignal:
          Timeout: PT10M
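
The Timeout value is an ISO 8601 duration, so PT10M means ten minutes. As a rough check of how much of that budget the 'sleep 120' in the UserData consumes, here is a minimal sketch; `iso8601_duration_seconds` is a hypothetical helper that only handles the simple hours/minutes/seconds forms.

```python
import re


def iso8601_duration_seconds(duration):
    """Convert a simple ISO 8601 duration such as 'PT10M' or 'PT1H30M' to seconds."""
    match = re.fullmatch(r"PT(?:(\d+)H)?(?:(\d+)M)?(?:(\d+)S)?", duration)
    if not match or not any(match.groups()):
        raise ValueError(f"Unsupported duration: {duration!r}")
    hours, minutes, seconds = (int(g) if g else 0 for g in match.groups())
    return hours * 3600 + minutes * 60 + seconds


timeout = iso8601_duration_seconds("PT10M")  # total time CloudFormation will wait
remaining = timeout - 120                    # what is left after the 'sleep 120'
```

In other words, the instance boot, the package installation and the page creation together need to finish in roughly eight minutes, otherwise the Wait Condition times out and the stack creation fails.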

Defining the Custom Resource AMI

The AMI is built using a CloudFormation Custom Resource, which depends upon the Wait Condition:

    AMIBuilder:
      Type: Custom::AMI
      DependsOn: AMICreate
      Properties:
        ServiceToken: !GetAtt AMIFunction.Arn
        InstanceId: !Ref WebInstance

Note that this Custom Resource depends upon the AMICreate wait condition, and requires the InstanceId of the Webserver.

The Lambda Function 'AMIFunction' does the actual processing for this custom resource.

Defining the Lambda Function for the Custom Resource

The Lambda Function is written in Python. This requires a LambdaExecutionRole, which I have included in the GitHub distribution. I have not included all the syntax for this here, but the role needs to allow the following EC2 actions:

    LambdaExecutionRole:
      Type: AWS::IAM::Role
      Properties:
        RoleName: "Demo-LambdaExecutionRoleForAMIBuilder"
        AssumeRolePolicyDocument:
          .......
                - 'ec2:DescribeInstances'
                - 'ec2:DescribeImages'
                - 'ec2:CreateImage'
                - 'ec2:DeregisterImage'
                - 'ec2:CreateSnapshots'
                - 'ec2:DescribeSnapshots'
                - 'ec2:DeleteSnapshot'
                - 'ec2:CreateTags'
                - 'ec2:DeleteTags'

The actual Lambda function needs to extract the InstanceId of the EC2 Webserver that was created earlier, and the event RequestType. The latter is set to 'Create', 'Update' or 'Delete', depending on the action which the calling CloudFormation stack is currently performing.

    import boto3
    import cfnresponse

    def handler(event, context):
        # Init ...
        rtype = event['RequestType']
        print("The event is: ", str(rtype))
        responseData = {}
        ec2api = boto3.client('ec2')
        # Waiter that blocks until the new AMI reaches the 'available' state
        image_available_waiter = ec2api.get_waiter('image_available')
        # Retrieve parameters
        instanceId = event['ResourceProperties']['InstanceId']
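
To make the shape of the incoming request more concrete, here is a trimmed, made-up 'Create' event together with a small parsing helper. The field names follow the CloudFormation custom resource request format, but every value (account number, region, IDs, URL) is a placeholder, and `parse_request` is a hypothetical helper rather than part of the demo code.

```python
# Hypothetical 'Create' event, trimmed to the fields discussed in this post.
sample_event = {
    "RequestType": "Create",
    "ResponseURL": "https://example.invalid/presigned-url",
    "StackId": "arn:aws:cloudformation:eu-west-2:111122223333:stack/demo/example",
    "RequestId": "example-request-id",
    "ResourceType": "Custom::AMI",
    "LogicalResourceId": "AMIBuilder",
    "ResourceProperties": {
        "ServiceToken": "arn:aws:lambda:eu-west-2:111122223333:function:AMIFunction",
        "InstanceId": "i-0123456789abcdef0",
    },
}


def parse_request(event):
    """Return (request_type, instance_id), rejecting unknown request types."""
    rtype = event["RequestType"]
    if rtype not in ("Create", "Update", "Delete"):
        raise ValueError(f"Unexpected RequestType: {rtype!r}")
    return rtype, event["ResourceProperties"]["InstanceId"]


rtype, instance_id = parse_request(sample_event)
```

The InstanceId key is present only because the AMIBuilder resource passes it in its Properties; any other property added there would appear under ResourceProperties in the same way.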

The main processing block of this Lambda function needs to handle the 'Update' request type. When the CloudFormation stack is updated, it is assumed that an earlier AMI and snapshot were created. Therefore, the 'Update' code should first remove the old AMI and snapshot, before executing the 'Create' code to build a new one. The following code will therefore de-register the custom AMI, and then find the old snapshot and delete it. The order matters, because a snapshot cannot be deleted while a registered AMI still uses it. Note that this depends upon the old AMI having been given the expected name, and the snapshot having been specifically tagged, in the earlier 'Create' operation:

        # Main processing block
        try:
            if rtype in ('Delete', 'Update'):
                # deregister the AMI and delete the snapshot
                print("Getting AMI ID")
                res = ec2api.describe_images(Filters=[{'Name': 'name', 'Values': ['DemoWebServerAMI']}])
                print("De-registering AMI")
                ec2api.deregister_image(ImageId=res['Images'][0]['ImageId'])
                print("Getting snapshot ID")
                res = ec2api.describe_snapshots(Filters=[{'Name': 'tag:Name', 'Values': ['DemoWebServerSnapshot']}])
                print("Deleting snapshot")
                ec2api.delete_snapshot(SnapshotId=res['Snapshots'][0]['SnapshotId'])
                responseData['SnapshotId'] = res['Snapshots'][0]['SnapshotId']
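
One thing to be aware of with the code above is that indexing [0] raises an error if no matching AMI or snapshot exists, which would cause a 'Delete' request to fail. A more defensive variant could look like the sketch below. It is not the code from the GitHub repository: `cleanup_old_ami` is a hypothetical helper, and restricting the searches to resources owned by the current account (Owners / OwnerIds set to 'self') is my own assumption.

```python
def cleanup_old_ami(ec2api,
                    ami_name="DemoWebServerAMI",
                    snapshot_tag="DemoWebServerSnapshot"):
    """De-register matching AMIs, then delete matching snapshots.

    ec2api is assumed to be a boto3 EC2 client. Returns the IDs that were
    removed, and does nothing (without raising) when nothing matches.
    """
    removed = {"ImageIds": [], "SnapshotIds": []}

    # De-register first: a snapshot cannot be deleted while an AMI uses it.
    images = ec2api.describe_images(
        Owners=["self"],
        Filters=[{"Name": "name", "Values": [ami_name]}],
    )["Images"]
    for image in images:
        ec2api.deregister_image(ImageId=image["ImageId"])
        removed["ImageIds"].append(image["ImageId"])

    snapshots = ec2api.describe_snapshots(
        OwnerIds=["self"],
        Filters=[{"Name": "tag:Name", "Values": [snapshot_tag]}],
    )["Snapshots"]
    for snapshot in snapshots:
        ec2api.delete_snapshot(SnapshotId=snapshot["SnapshotId"])
        removed["SnapshotIds"].append(snapshot["SnapshotId"])

    return removed
```

With this shape, deleting a stack whose AMI was already removed by hand would still report success back to CloudFormation.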

The next part of this code block deals with the creation of the snapshot and AMI itself, and ensures that they have the correct tags which will be referenced when the stack is deleted later on:

            if rtype in ('Create', 'Update'):
                # create the AMI
                print("Creating AMI and waiting")
                res = ec2api.create_image(
                    Description='Demo AMI created for autoscaling group',
                    InstanceId=instanceId,
                    Name='DemoWebServerAMI',
                    NoReboot=True,
                    TagSpecifications=[
                        {'ResourceType': 'image',
                         'Tags': [{'Key': 'Name', 'Value': 'DemoWebServerAMI'}]},
                        {'ResourceType': 'snapshot',
                         'Tags': [{'Key': 'Name', 'Value': 'DemoWebServerSnapshot'}]}]
                )
                image_available_waiter.wait(ImageIds=[res['ImageId']])
                responseData['ImageId'] = res['ImageId']
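
As an aside, the tags are not the only way to find the snapshot that belongs to an AMI. While the AMI is still registered, EC2 can report which snapshots back it. The following is a minimal sketch of that alternative, not what the demo does: `snapshot_ids_for_image` is a hypothetical helper and assumes a boto3 EC2 client is passed in.

```python
def snapshot_ids_for_image(ec2api, image_id):
    """Return the EBS snapshot IDs that back the given AMI."""
    images = ec2api.describe_images(ImageIds=[image_id])["Images"]
    if not images:
        return []
    return [
        mapping["Ebs"]["SnapshotId"]
        for mapping in images[0].get("BlockDeviceMappings", [])
        # Skip mappings that have no EBS snapshot behind them.
        if "SnapshotId" in mapping.get("Ebs", {})
    ]
```

Note that this lookup has to happen before the AMI is de-registered, which is one reason the tag-based approach used in this demo is simpler for the 'Delete' path.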

Finally, I use the cfnresponse utility to send back the signal. The ImageId is sent back in the responseData structure:

            # Everything OK... send the signal back
            print("Operation successful!")
            cfnresponse.send(event,
                             context,
                             cfnresponse.SUCCESS,
                             responseData)
        except Exception as e:
            print("Operation failed...")
            print(str(e))
            responseData['Data'] = str(e)
            cfnresponse.send(event,
                             context,
                             cfnresponse.FAILED,
                             responseData)
        #return True
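
It helps to know roughly what cfnresponse.send does with these arguments. The sketch below is a simplified approximation: it builds the JSON document that is sent back to CloudFormation, but does not perform the HTTP PUT to event['ResponseURL'] that the real utility does. The function name `build_response_body` is hypothetical.

```python
import json


def build_response_body(event, log_stream_name, status, response_data,
                        physical_resource_id=None):
    """Build the JSON body that a custom resource returns to CloudFormation."""
    body = {
        "Status": status,  # "SUCCESS" or "FAILED"
        "Reason": "See the details in CloudWatch Log Stream: " + log_stream_name,
        # Fall back to the log stream name when no physical ID is supplied.
        "PhysicalResourceId": physical_resource_id or log_stream_name,
        "StackId": event["StackId"],
        "RequestId": event["RequestId"],
        "LogicalResourceId": event["LogicalResourceId"],
        "NoEcho": False,
        # Keys under Data become attributes readable with !GetAtt.
        "Data": response_data,
    }
    return json.dumps(body)
```

The Data element is the important part for this demo: because the handler stores the new AMI under the 'ImageId' key, the rest of the template can read it with !GetAtt AMIBuilder.ImageId.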

Defining the AutoScaling Group using the AMI

The remainder of the CloudFormation template was relatively straightforward, defining the ElasticLoadBalancingV2 resources.

The resources I needed to define in CloudFormation are:

- AWS::ElasticLoadBalancingV2::TargetGroup

- AWS::ElasticLoadBalancingV2::LoadBalancer

- AWS::ElasticLoadBalancingV2::Listener

- AWS::EC2::LaunchTemplate

- AWS::AutoScaling::AutoScalingGroup

- AWS::AutoScaling::ScalingPolicy

    DemoAutoScalingLaunchTemplate:
      Type: AWS::EC2::LaunchTemplate
      Properties:
        LaunchTemplateData:
          ImageId: !GetAtt AMIBuilder.ImageId
          InstanceType: 't4g.micro'
          Monitoring:
            Enabled: 'true'
          SecurityGroupIds:
            - !Ref WebSecurityGroup

Conclusions

This turned out to be a helpful exercise in creating CloudFormation Custom resources, and also passing back attributes using the cfnresponse.send utility.

Specific lessons I have noted are:

- Allow time for the EBS volume on the source server to be sync'd. If not, you need to use the "reboot" option when building the AMI

- Ensure that the 'Update' scenario is coded for in the Lambda function.

- Proper tagging of resources, specifically the EBS snapshot and the AMI, ensures that you can reference them at some future date.

To conclude, the following snippet shows how to extract the attributes from the initial web server, the load balancer and the ImageId which is passed back by the cfnresponse.send utility from the Lambda function:

    Outputs:
      WebServer:
        Value: !GetAtt WebInstance.PublicIp
        Description: Public IP address of Web Server
      AMIBuilderRoutine:
        Value: !GetAtt 'AMIBuilder.ImageId'
        Description: Image created by the AMI Builder routine
      DNSName:
        Value: !GetAtt DemoLoadBalancer.DNSName
        Description: The DNS Name of the Elastic Load Balancer
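
Once the stack has been created, these outputs can also be read programmatically. The following is a minimal sketch, assuming a boto3 CloudFormation client is passed in; `stack_outputs` is a hypothetical helper and the stack name in the usage note is whatever name you chose when launching the stack.

```python
def stack_outputs(cfn_client, stack_name):
    """Return the stack's outputs as a plain {OutputKey: OutputValue} dict."""
    stacks = cfn_client.describe_stacks(StackName=stack_name)["Stacks"]
    return {
        output["OutputKey"]: output["OutputValue"]
        for output in stacks[0].get("Outputs", [])
    }
```

For example, `stack_outputs(boto3.client("cloudformation"), "my-demo-stack")["DNSName"]` would give the load balancer address to open in a browser, with "my-demo-stack" being a placeholder name.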

The full CloudFormation template is available in GitHub here:

https://github.com/dendad-trainer/simple-aws-demos/tree/main/custom-ami-autoscaling